My AI Thesis: Generative AI’s First Year and What’s Next

An Early 2024 Reflection and Forecast After Thousands of Hours Embedded in AI

I. Preface

I started writing this essay on January 7th, 2024. On January 25th, I got a frantic call from my sister. Within a few hours, I was on an international flight to attend to a family emergency thousands of miles away from home. Reeling from that incident, I tried to finish and publish this writing in May of 2024, but decided not to so that I could double down on quiet reflection and action. Today is October 30th, 2025, and, sitting on the tail end of a career break, I have decided to publish this. While the core arguments remain as I wrote them in early 2024, I’ve updated references and some of the context over time. I’m publishing it now, rough edges and all.

In 2023 and 2024, I needed outlets and spaces for discourse and creative explorations — where I and others could talk about and build things that mirrored the wider trajectory of AI. So, while in my 9-5, I found the outlets and created the spaces for myself and others. And I turned to my quiet, unspoken strength of writing to tie it all together. In this and other essays (including my writing archive on Medium), I set off to reflect on 10+ years of a career in tech as a “UX designer” and the emerging AI trends, and to document a journey into the future. Specifically in this essay, I share the result of thousands of hours of immersion—building, thinking, designing, tinkering, learning, etc.— in the current AI wave.

Today, I have finally decided to publish this writing, keenly aware that the AI world has moved on at an incredible pace since I last wrote this — rendering some of what I originally wrote “old knowledge,” but still relevant. Personally, this old news has allowed me to “check the work” of my own thinking and recalculate as needed, although a lot of what I wrote about below (e.g. Jevons paradox, AI infrastructure, the UX dilemma, competitors, etc.) has transpired almost exactly as I imagined and penned down.

What you are about to read was born out of deep struggle, love, and creative endeavor. It is extremely long, but could be extremely useful to you — especially if you are still a little unsure about how we got here, what’s important, or what’s coming next.

Cheers ♥️

Note: see appendix at the bottom of this page for disclaimers.

II. Introduction: AI Recaptures Our Imagination

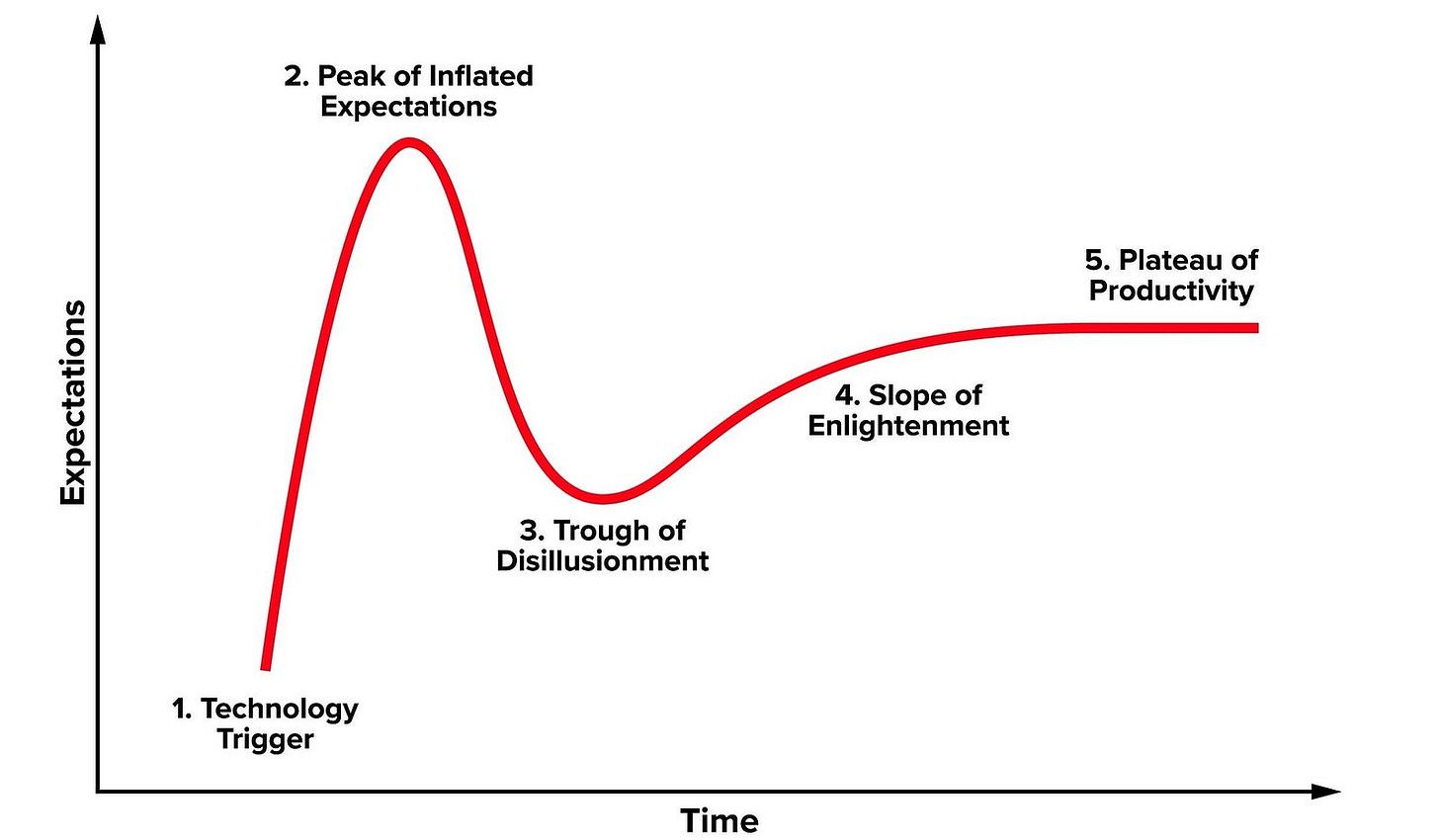

Since 2022, artificial intelligence has felt less like a distant and niche technical concept and more like a sudden powerful force that has sucked everything into its gravitational pull. It has recaptured our collective imagination, sparking cycles of intense hype, profound anxiety, and endless debate. We are clearly in a new “AI Summer,” but the promises of this season will soon fade as we cycle to an inevitable “AI Winter.”¹

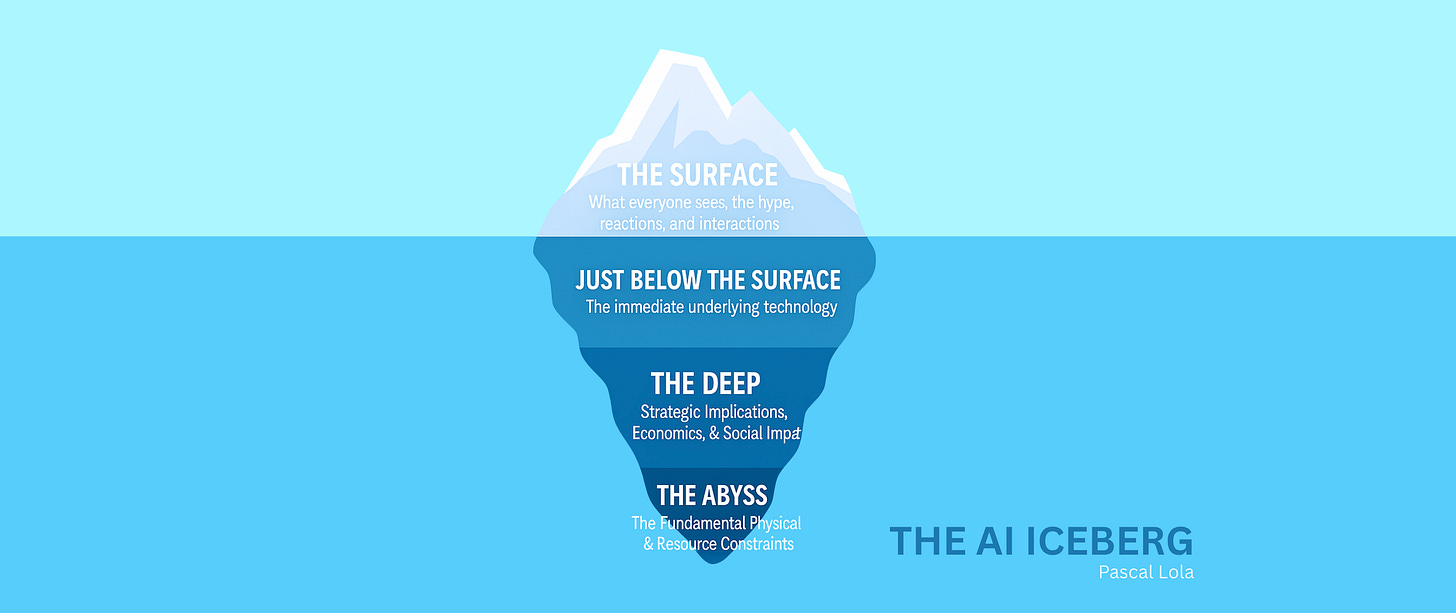

The catalyst for this moment is Generative AI, a technology that has produced everything from jaw-dropping human-like art to fluent chatbots that sometimes feel like trustworthy human-like entities. But this essay argues that the true story of this AI revolution is not happening on the surface with these magical-seeming tools. The real story that will define the next decade is happening at a deeper level.

It’s a story about:

The physical infrastructure of chips and energy that forms the real-world bottleneck to digital intelligence.

The economic war being fought over open- vs. closed-source models and the strategic race for a data-based “moat.”

The professional reckoning for creative fields, particularly User Experience (UX), which must evolve beyond its “pixel-focused value theater” or risk becoming obsolete.

The human response to a destabilizing force, and the timeless need to separate high-signal insights from the “mumbo-jumbo” of the hype cycle.

While I primarily wrote this to crystallize my own thinking, I hope that by reading this, you are better positioned to understand the complex layers of this new era. I hope you can go deeper, reconsider your own journey, and find your footing as we navigate this new landscape together.

Chapter 1: The generative AI catalyst

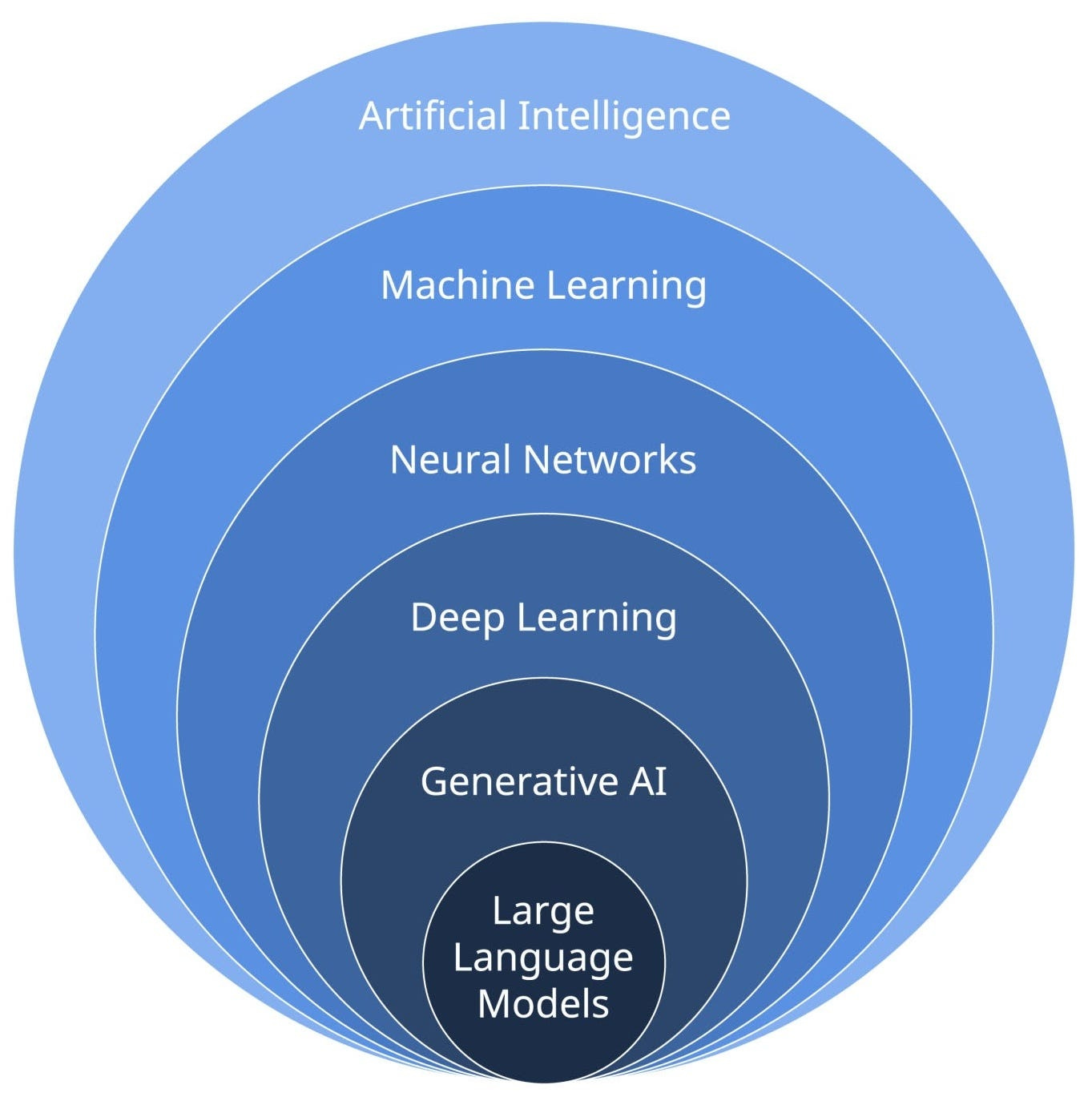

The catalyst for this AI moment is a very specific type of AI called Generative AI. Although generative AI began to gain mass appeal at the end of 2022, it has been brewing in the background since the 1960s and earlier. But instead of getting into a history lesson, I’ll do a very broad summary of generative AI before we dig deeper.

Generative AI includes a range of artificial intelligence technologies that are designed to create digital artifacts which historically only humans have been able to create. Generative AI uses machine learning (specifically deep learning) algorithms to analyze and learn from large datasets composed primarily of human-produced content, which then enables it to generate outputs that mimic human-like creativity. These outputs can range from written text to visual artwork, music, and more. Generative AI works by learning the underlying patterns and structures in the data it has been trained on, allowing it to probabilistically predict detailed compositions of digital artifacts that seem both original and realistic compared to human-produced artifacts.

Example: Let’s say you want to generate a picture of a cat using a fictional generative AI tool called CATgpt. This tool was trained by analyzing millions of photos that humans have taken of cats. From this data, it learned the underlying patterns — the typical shape of a cat’s ear, the texture of its fur, the common colors of its eyes. When you ask for a picture, CATgpt probabilistically generates a new set of pixels that it “predicts” will look like a cat to you. Here’s a better analogy: It’s not like asking someone who has never seen a cat to draw one. It’s like asking an artist who has spent their entire life studying every single photograph of a cat in existence to draw a new one from their imagination. They aren’t copying any single photo; they are creating an original image based on the statistical “rules” of what makes a cat look like a cat.
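To make the "learn patterns, then probabilistically generate" idea concrete, here is a deliberately tiny sketch. It is not how CATgpt or any real image or language model is built; it assumes a made-up three-sentence training set and uses a simple word-follows-word table (a bigram model) in place of deep learning. The names `TRAINING_TEXT`, `train`, and `generate` are invented for this illustration.

```python
import random
from collections import defaultdict

# Hypothetical stand-in for "millions of cat photos": a few human-written sentences.
TRAINING_TEXT = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a cat sat on a sofa",
]

def train(sentences):
    """Record which word follows which. This table is the learned 'pattern'."""
    follows = defaultdict(list)
    for sentence in sentences:
        words = ["<start>"] + sentence.split() + ["<end>"]
        for current, nxt in zip(words, words[1:]):
            follows[current].append(nxt)
    return follows

def generate(follows, rng, max_words=12):
    """Pick one word at a time, in proportion to how often it followed the previous word."""
    word, output = "<start>", []
    while len(output) < max_words:
        word = rng.choice(follows[word])
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)

model = train(TRAINING_TEXT)
sample = generate(model, random.Random(0))  # fixed seed so reruns match
print(sample)
```

The generated sentence may never appear word for word in the training text, yet every step in it follows a transition the model has seen before. That is the toy version of the artist who is not copying any single photo but drawing from the statistical "rules" of what a cat looks like.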

Chapter 2: Generative AI vs. Large Language Models

Mass appeal sometimes comes with drawbacks. And with this AI moment, the way the masses are indiscriminately using the words “AI”, “generative AI”, and “Large Language Models (LLMs)” breeds confusion and risks slowing innovation further down the funnel. Also, we are grappling with the “golden hammer” problem as AI is becoming the buzzword solution to problems that we don’t even understand. And with that, I’ll try to clarify the difference between generative AI, LLMs, and more.

Large Language Models like GPT (from OpenAI), BERT or Gemini (from Google), or other similar models were birthed from a Natural Language Processing innovation of 2017 when the Transformer architecture was introduced². LLMs operate based on the Natural Language Processing (NLP) principles of language understanding and generation. With this, LLMs are: 1) primarily designed for understanding and generating text-based content, 2) trained on large volumes of text data (which humans have generated tons of), and 3) great at tasks like writing, generating conversational replies, summarizing text, categorizing text, extracting sentiments from text, etc. However, while LLMs are a popular example of generative AI, other types of generative AI exist for different purposes:

Image Generation: These models are trained on image data. They learn from vast collections of images and are able to generate new images based on learned patterns and features. They don’t “understand” images in the linguistic sense but rather in terms of pixel patterns, colors, and textures. Examples include OpenAI’s DALL-E or Stability AI’s Stable Diffusion.

Music Composition: These models are trained specifically on audio data. They analyze patterns in music, such as rhythm, melody, and harmony, to compose new music pieces. The training process involves understanding the structure of sound, not language. Examples include OpenAI’s Jukebox or Google’s Lyria.

Speech Synthesis: These models convert text to speech and are trained on both text and audio data. They learn how to pronounce text as a human would, which involves phonetics and audio processing and not just language modeling. Examples include Google’s WaveNet or Amazon’s Polly.

Molecular Science: These models are revolutionizing molecular research in fields such as biology, chemistry, and pharmacology. They are particularly influential in drug discovery, protein engineering, and the design of new materials, where they predict molecular structures or outcomes of chemical reactions and interactions. Trained on data about chemical properties and interactions, which differs significantly from natural language, these models offer new ways to understand and manipulate substances at the molecular level. Examples include Google’s AlphaFold and Adaptyv Bio’s protein engineering platform.

Note: I am particularly terrified of AI intersecting with biology.

Each of the above uses specialized AI training that is appropriate for the type of data and the specific tasks they are designed to perform. The training data, model architecture, and intended output for these models differ from those used in LLMs. So, while LLMs are catalysts to this AI moment and enable one type of generative AI, the broader generative AI landscape includes various specialized models that are trained on different types of data — not just text. And beyond generative AI, there is an even wider non-generative AI landscape that is very important to understand. Although we won’t dive into the full AI landscape in this essay, you can read a little more about the other AI technologies in Chapter 5, titled “Beyond Generative AI”.

Chapter 3: Data and AI model training

You’ve probably heard the statement “data is the new oil”. This metaphor is often used to talk about the important role of data in powering digital experiences and shaping modern economies the way oil powers physical (and digital) machinery and also shapes modern economies. Thus, even now, data is king! Data is especially important during the stage and process called AI model training.

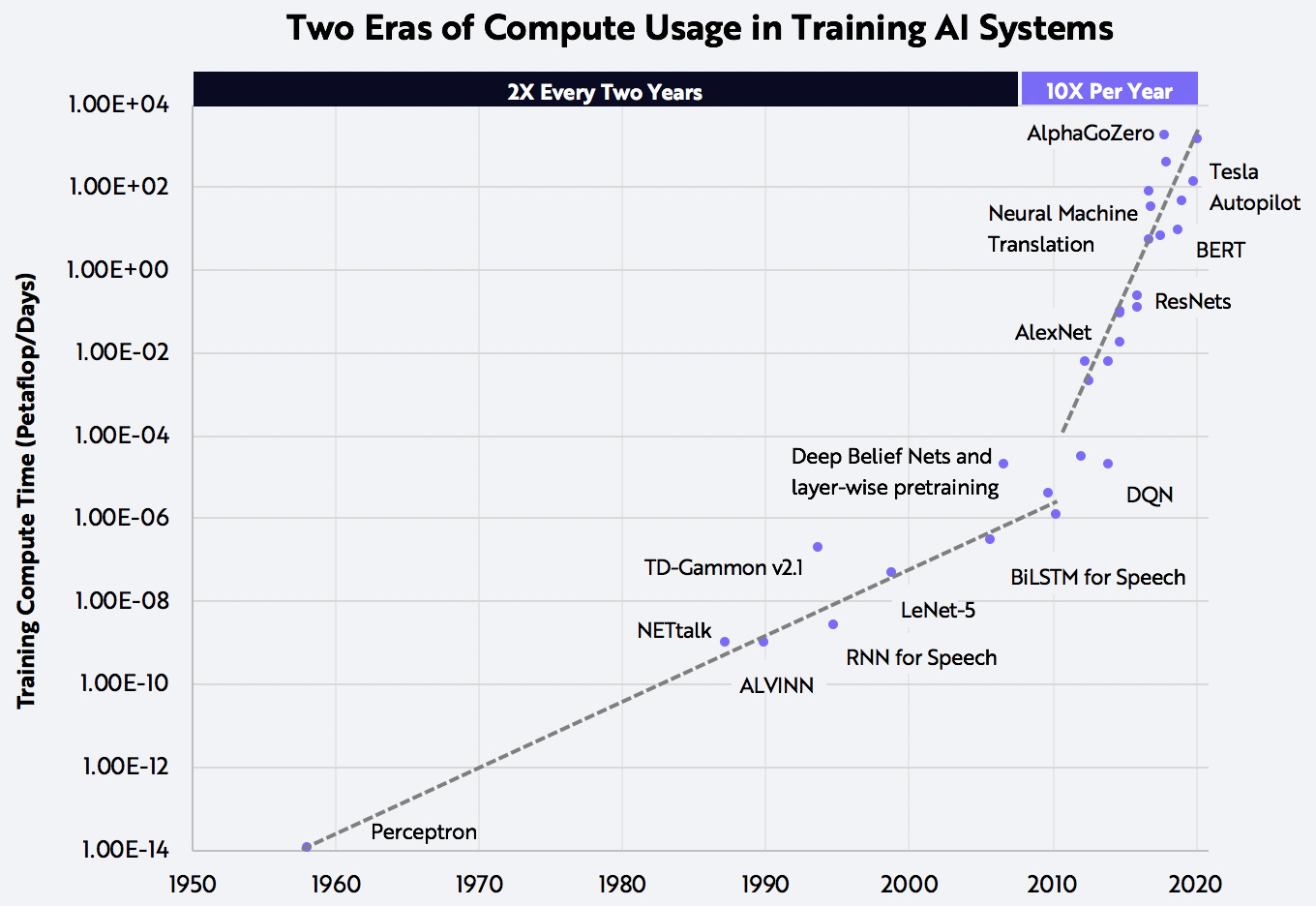

Training an AI model is one of the most important and difficult steps³ in the creation of AI-powered products and experiences, especially when it comes to generative AI. The best AI models require significant investments across the data value chain and pipeline, in addition to gargantuan investments in AI model training infrastructure (e.g. hardware, software, energy, time, etc.). This resource intensity is a barrier to entry for the “little guys”. However, things are slowly changing. The current progress of AI model training seems to be outpacing Moore’s Law⁴ (at least analogically since Moore’s Law is about transistors on chips and not AI).

Both hardware and software are improving in effectiveness and efficiency, which means costs will also decrease⁵. In a decreasing cost environment, big and “little guys” have somewhat of a level playing field (although Marc Andreessen recently smacked us with Jevons paradox of increasing costs⁶).
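For readers who want to see why cheaper compute can still mean a bigger total bill, here is a rough numerical sketch of the Jevons paradox. It rests on an assumption that is mine, not the footnoted source's: demand follows a constant-elasticity curve, where quantity demanded equals `scale * unit_cost ** (-elasticity)`. The function name, the 80% cost drop, and the two elasticity values are all hypothetical.

```python
def total_spend(unit_cost, elasticity, scale=1.0):
    """Total spend = unit cost x quantity demanded.

    Assumes (hypothetically) constant-elasticity demand:
    quantity = scale * unit_cost ** (-elasticity).
    """
    quantity = scale * unit_cost ** (-elasticity)
    return unit_cost * quantity

# Compare spend before and after a hypothetical 80% drop in unit cost.
for elasticity in (0.5, 1.5):
    before = total_spend(unit_cost=1.0, elasticity=elasticity)
    after = total_spend(unit_cost=0.2, elasticity=elasticity)
    direction = "rises" if after > before else "falls"
    print(f"elasticity={elasticity}: total spend {direction} ({before:.2f} -> {after:.2f})")
```

Under this assumption, when demand is weakly responsive to price (elasticity below 1), cheaper compute lowers total spend. When demand is strongly responsive (elasticity above 1), usage grows faster than the price falls and total spend goes up, which is the Jevons scenario.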

So as the cost of training decreases, and as more models are open-sourced and become commoditized, proprietary data becomes a greater differentiator. And proprietary data is often about infusing a “unique” recipe to create differentiated experiences.

Example: For an illustrative scenario⁷, consider CAT Inc., the maker of CATgpt, which spends $5M to train its AI model using widely available data on cats (books, articles, photos, etc). A few years later, BetterCats achieves similar results for just $1M thanks to advances in AI model training. CAT Inc.’s initial investment might seem poorly judged given BetterCats started with an 80% discount. But if CAT Inc. could secure exclusive access to top-tier veterinary clinical data, they could enhance their AI model to offer unique features like diagnosing cat illnesses using photos, video, sound, etc. Could this offer mega business and experiential competitive advantage over BetterCats? Ya bet!

III. Beyond the Basics

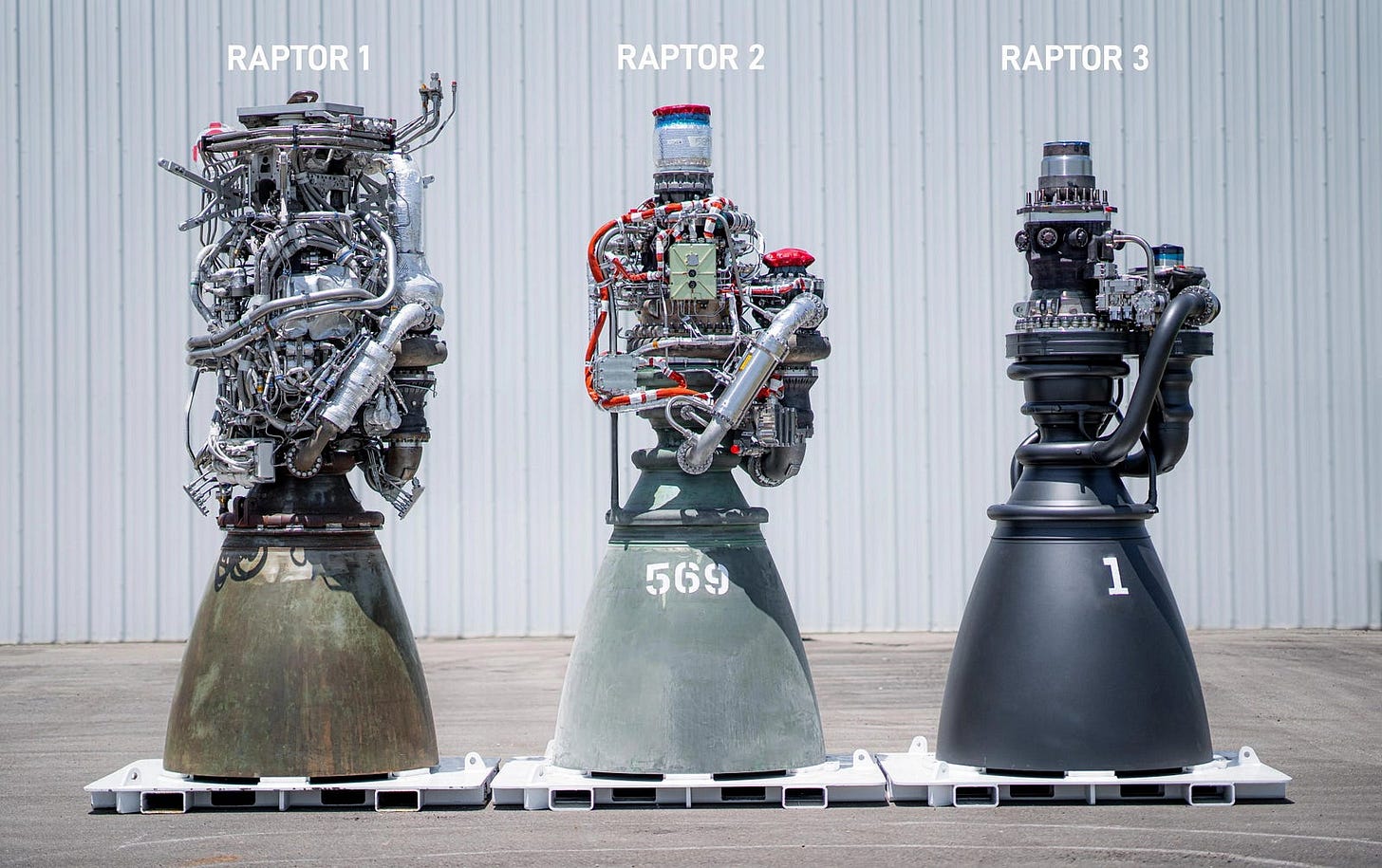

Chapter 4: AI Infrastructure is The End Game

Scaling the business and adoption of generative AI and other AI technologies will not happen without significant investments in AI infrastructure, which includes both the “hardware and software components that are necessary for building, training, and using AI models”⁸. But as the tech adage goes: “hardware is hard”. And when it comes to this AI moment, hardware represents a large percentage of the cost and effort in the AI model creation process. Therefore, substantial attention and investment have been pouring into this space in order to drive costs down⁹ and make hardware less hard — improving both effectiveness and efficiency in AI model training.

On the AI model training side, Nvidia has been leading the way, much to the benefit of its shareholders. However, many companies are sprinting to build their own specialized AI chips, eating away at Nvidia’s margins while gaining more cost and infrastructure control. The competition in the current AI hardware marketplace is berserk. The good news is that it will reshape industry dynamics, and outside of Jevons paradox⁶, it should lower costs and barriers to entry and adoption for many.

From a software lens, one of many examples of innovation is the reduction of software complexity — and consequently the cost of LLM training. For example, Andrej Karpathy, formerly of Tesla and now at OpenAI (although by the publication of this, Andrej left OpenAI), is addressing these challenges by exploring more efficient AI model training methods through code simplification¹⁰. He is working on a project that involves writing the training code directly in C, a comparatively low-level language, which also minimizes the need for external libraries in the training stages of LLM development. This is because lower-level languages can offer more direct control over hardware, potentially leading to optimizations that higher-level languages might not easily permit.

And, needless to say, simpler is often better!

Chapter 5: Beyond Generative AI

It’s time to go beyond the hype, and tone down our infatuation with LLMs and generative AI. While the advancements in LLMs and generative AI are exciting and powerful, it’s best to approach the hype with skeptical optimism, maintain a balanced perspective, and recognize the value of other AI applications and technologies like: robotic process automation, computer vision for medical imaging, predictive analytics for climate modeling, recommendation systems, autonomous vehicles, and many more. The future of AI might not be as heavily focused on generative AI as the hype suggests. Generative AI still faces significant limitations, such as the accuracy and reliability of the information it produces¹¹, significant energy constraints, and more.

The evolution of AI and of technology transcends generative AI models. The most engaging and powerful digital experiences of the future will involve a combination of generative AI, non-generative AI, and non-AI capabilities. Therefore, it’s really important for product makers and marketers to understand and form a strong perspective on this in order to more effectively shape experiences for people. We have to push back against the “golden hammer” problem by more intelligently talking about and identifying the right AI or non-AI solutions to the right problems. Right now AI is a mumbo-jumbo-googly-doogly-do magic word that “delightfully” takes the user’s “pain-points” away.

Lastly, I have appreciated one of the AI Godfathers and Meta’s Chief AI Scientist, Yann LeCun, and his advocacy for a more nuanced understanding and expectations of LLMs and generative AI. As he recently said:

“LLMs are trained with 200,000 years worth of reading material and are still pretty dumb. Their usefulness resides in their vast accumulated knowledge and language fluency. But they are still pretty dumb…As long as AI systems are trained to reproduce human-generated data (e.g. text) and have no search/planning/reasoning capability, performance will saturate below or around human level.” — Yann LeCun

Chapter 6: The AI Doom-Bloom Continuum

Let’s remember that technologies, such as AI, are essentially neutral tools, but when placed in the hands of humans, they can be employed for both good and ill. In this light, AI has the potential to significantly enhance society, although there are also ills that we’ll have to grapple with. By removing the barriers to entry that individuals and smaller businesses often encounter in their respective markets, AI can democratize skills and knowledge that were once the exclusive domain of a few large corporations and individuals. Democratization creates opportunities and competition — giving more people a better shot at securing the golden eggs of capitalism, or whatever else they so desire.

And with the ills, we will contend. That’s just part of life, given nothing is purely good — on the other side of “good” is often the opposite. But be careful to not be overly focused on the ills and the “not-so-good” of AI. Check out Pessimist’s Archive to jog your memory on the “hysteria, technophobia and moral panic that often greets new technologies, ideas and trends.” But at the same time, let’s neither underestimate nor ignore human propensity towards selfishness at the cost of others.

Chapter 7: Open vs. Closed AI

Some say that open-source models will mitigate some of the harm that could be caused by AI. Regardless, the battle between open and closed AI is extremely important and has profound implications for the future of AI and business viability. Open-sourcing technologies like large language models can strategically undermine competitors’ economic standing by providing a powerful, free alternative that diminishes the unique value of proprietary solutions. This approach not only dilutes a competitor’s market advantage but also forces them to further innovate to differentiate their offerings. Moreover, adopting an open-source model serves as a protective moat, preventing companies from being overly dependent on platforms controlled by others, such as with Apple’s control over its ecosystem. This platform control can significantly benefit the originator but poses considerable challenges for others who must build within or on top of an established framework.

Chapter 8: Global Competition & U.S. Dominance

We are at an AI inflection point, with an exciting trajectory, but also marked by hyper-competition. The path forward will undoubtedly be a volatile one; but make no mistake: nothing short of a cataclysmic event will stop humans from innovating and progressing. This is especially true for individuals, organizations, and nations that want to “catch up” and have the grit and resources to do so. While some are experiencing the “Innovator’s Dilemma”, others are risk-on and marching forward.

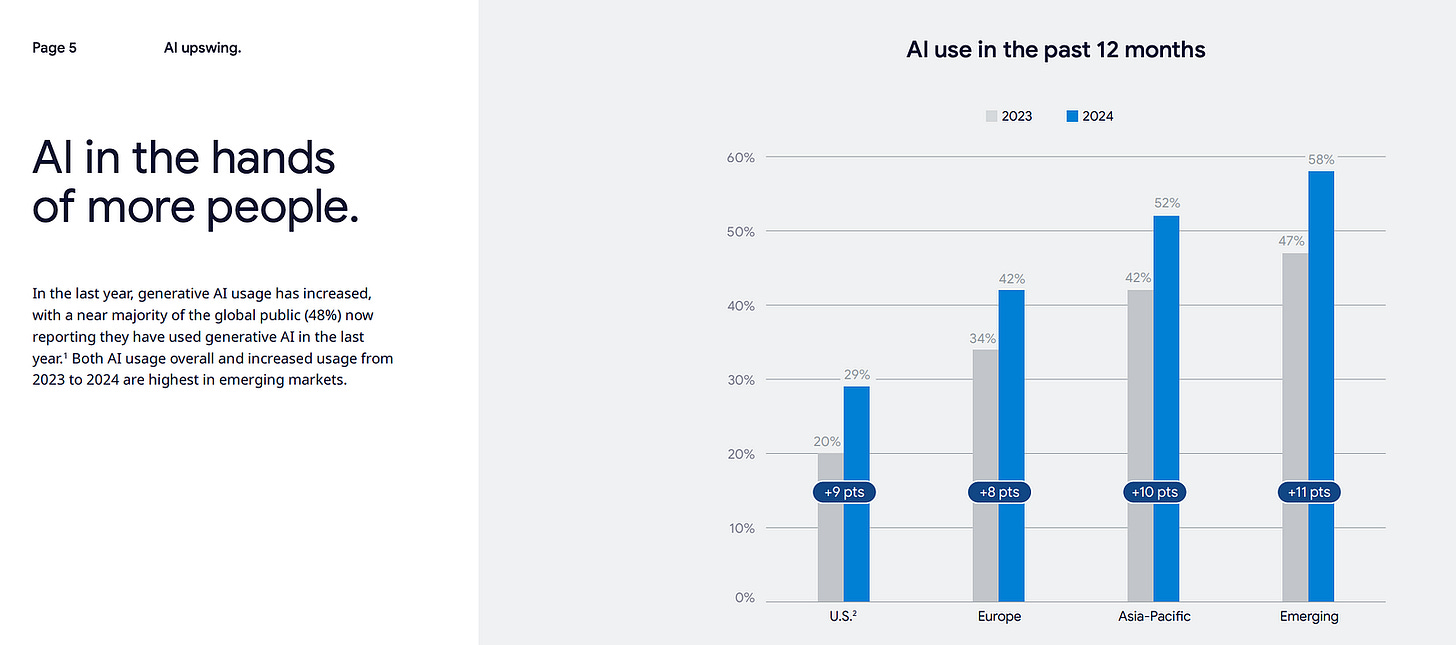

A 17,000-person global survey conducted by Google and Ipsos shows early indications that there might be more optimism in emerging markets. At face value, you would think that emerging markets have the most to lose in a world of AI and the automation it can enable. But it’s quite the contrary, since emerging markets are less tethered to the success of how things have been. New innovation, and the economies that grow from it, spreads in somewhat unpredictable ways, mainly due to the democratization of tech and the human desire for progress.

However, as a biased believer and stakeholder in “American exceptionalism”, the United States, to me and many others, remains the most fertile ground for new-era innovation. In the United States, the business and ideas landscape is rife with both struggle and marvelous wonder as people and organizations compete for limited resources and dominance, similar to the story of life on our planet. This environment will bring about the best for the whole, and more people than ever will benefit from the prosperity AI will create. I know that’s not a popular thing to say, but I would be dishonest to say anything else.

Lastly, there are 3 underestimated (or perhaps silent) competitors that will eventually shape the AI landscape like no one else:

Google: a heavyweight temporarily caught in the stumble

TBD small company that no one cares about right now

China

IV. Implications for (UX) Design

Chapter 9: Pixels are Becoming More Free

The costs and effort associated with generating pixels have been going down with every advancement in digital technology and generative AI has dramatically accelerated that trend. This reality hasn’t sunk in for most of those who make their living making pixels like it’s 1998.

Unfortunately UX will also be purged of its inflated pixel-focused value theater (i.e. supply and demand). And right now and for a few short years, it will be easier to either stick your head in the sand or to pretend to be embracing the moment yet dragging your feet in bureaucracy, gate-keeping (opposite of adapting), and fear. But eventually, the world will move forward with more efficiency and effectiveness in the ecosystem of product making and experience shaping. This doesn’t mean that the beauty of aesthetics and the craft thereof will be reduced to utilitarian garbage, but in fact, the opposite.

The shaking coming to UX will make UX better. The ones who will thrive will not necessarily be the ones with more education or more experience, but the ones with the greater sense of adaptability, clarity, curiosity, generosity, open-mindedness, and humble experimentation with AI tools. Also the ones who will keep learning to write — writing about what they are learning and building. One can’t learn to think without first learning to write.

“There is nothing like writing to force you to think and get your thoughts straight.” — attributed to Warren Buffett

Chapter 10: UX Skin in the Game

As AI shifts everything, there is an opportunity for UX to go back to its Human core. AI will make it easier to do critical thinking, and to automate certain tasks and actions. AI (and technologies in general) will make it easier to figure out the “how” for doing and building things. But, the “why” and “what” we build are really important and tough questions that will become even harder to outsource to disembodied entities that don’t yet have a great stake in, or understanding of, the human spirit (or even real world physics). But with the “Human” part of UX, of Human-Centered Design, of Human-Computer Interaction, we can finally enter another creative renaissance.

As mentioned earlier, experience will become the key differentiator as AI models become more commoditized. And it is my hope that design-minded, human-centered, creative-engineers, with go-getter attitudes, regardless of what their diploma says or doesn’t say, will and should build this future.

Unfortunately, as of now, it seems that more engineers and developers are harder at work while “UX” is busy working on (and mostly talking about) yesterday. Based on my limited observation, I presume that a majority of UX practitioners are absent from the threshing floor of this AI moment (and some are lackadaisical about it as a survival tactic) and from the conversation about how it’ll impact the next decade or two of modern civilization.

To not blame it squarely on UX, most humans in general usually don’t have enough skin in or the right skin for the game, regardless of the “game”. But that’s a different topic. Still, let’s wait and see what happens, because after all, UX (and its human-centered practice variations) helped lay the foundation for the AI moment we now find ourselves in.

Note: special thanks to all AI ethics design and research teams who have been laying the foundations for decades.

Chapter 11: UX Moving Forward & AI Maturity

UX should go beyond Figma and question its own sacred cows. UX people should take a Fundamentals of Machine Learning course (or some other “non-UX” topic) not because they will be writing any machine learning code, but because it’s critical that UX and everyone else reduce the gaps between the disciplines and other silos.

Figma will bring AI to the UX practitioners but Figma is but a tiny slice of what UX touches or does; and therefore, expect new tools that bring more integration and a more seamless path to product making. The path from business opportunity framing, to market and user research and insights, to ideation and prototyping doesn’t have to be fragmented across tools and mediums. Low code and generative interfaces, especially when powered by AI, will be a game changer for some parts of the process.

Expect that AI Maturity will become as much of a thing as Digital and Design Maturity. At first no one will know what the hell to do with it, ushering in a short period of consulting bros and well-intentioned execs selling AI (maturity) on a deck, in a meeting, and worst case under fluorescent lights.

But like any kind of maturity, it will be mostly organic and influenced by the cultural DNA and other attributes of the organization. Most organizations will not experience optimal maturity/growth and will be stunted by the seemingly invisible effects (culture) of those who desire to do business as usual, and by a few gatekeepers committed to the status quo and its elusive short-term promises of financial, political, and psychological safety.

And as experience¹² becomes the key differentiator in a world where AI models become more commoditized, UX will still remain in a position to play a critical role in shaping the future — starting with the impact we bring to an organization’s values and cultures, staying anchored to our Human core, and remaining fluid in the mindsets, processes, and tools that sometimes hold us and others back.

V. Variables of Scale

Chapter 12: Scale, Scale, Scale

If there were one word to describe the theme for the next stage of AI innovation, that word would be: scale. Hardware (especially “AI chips”), AI agents, multimodality, governance and regulation are some of the key topics being discussed under the one umbrella aspiration of scaling impact, business viability, ROI, adoption, and more. Every company seems to be going “chip-ham” in figuring out how to deploy AI, including Apple’s alleged plan to boost its sales by upgrading its entire line of Macs.

Hardware is one of the top 3 nodes in the critical path to scale, and so I strongly recommend familiarity with “Stargate”, NVIDIA Blackwell, Meta’s OpenCompute, UXL Foundation, and even this somewhat misrepresented $7 trillion investment by Sam Altman.

On the hardware front, there is a potential for new experiences to be enabled through form factors we hadn’t even considered yet. Jony Ive and Sam Altman might be better positioned to explain why they are seeking $1 billion for their hardware company. Also, there are many initiatives like Humane and Open Interpreter exploring screenless device experiences.

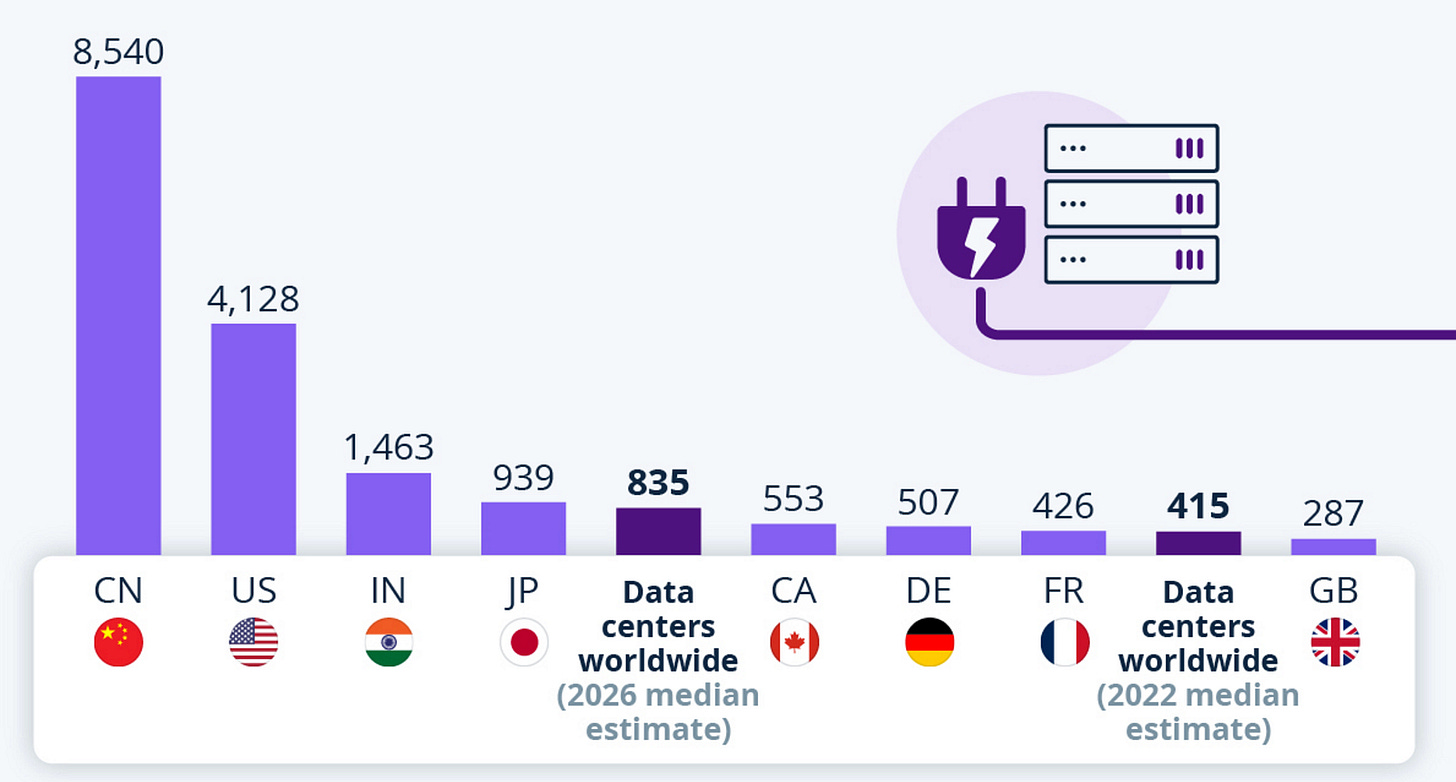

Chapter 13: The Mega Bottleneck of Energy Production

In my professional career, it took me too long to truly appreciate the symbiosis of the physical and digital worlds. Simply stated, digital experiences and the trillion dollar corporations behind them would not exist without the physical infrastructure and natural resources like fossil fuels, rivers and oceans, the sun and wind, and the people of nations like the Democratic Republic of the Congo¹³. What we call the cloud, apps, and AI are ethereal elements, built on physical infrastructure powered and constrained by the laws of physics and real-world resource scarcity. Humans have been fighting over resources, especially energy, for a long time. And in this AI era, it continues. Energy is perhaps the most important element needed to scale intelligence, but it is also expected to be the biggest bottleneck in the supply chain. We are currently on an unsustainable path to powering the data centers that run intelligence workloads. This is one of the reasons why nuclear energy is once again becoming an important topic.

VI. Navigating a Destabilizing Force

Chapter 14: Savior in Uncertainty

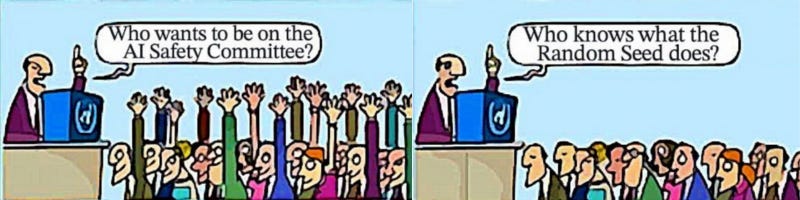

When uncertainty is elevated, humans look for a savior, a person or organization who will save the day and save us from uncertainty and the real or imagined tyrant(s). With this era, there are many saviors being promised. Many voices are creating noise at the moment with some shady behaviors typical of a gold rush event. Both individuals and groups alike are culprits. There is a lot of cluelessness happening which is a function of fear, lack of humility, and genuine cluelessness. Regulation and product making are experiencing a special type of cluelessness.

Chapter 15: Learning, Socializing, and Dead Men

There are macro and micro experiments happening at all levels and facets of society, with AI now being one amplifying force. And it is indeed destabilizing. How do you fight against this destabilizing force? By doubling down on learning and plugging yourself into sources of signal—usually where the masses are not congregated. After all, humans are learning beings from birth. Humans are also social beings; therefore, build relationships with high quality, high agency, and low-ego people. If possible, spend more time with these people in the real physical world. If you have none around you, or in addition to them, you can read books written by dead men and women.

Note: Know the basics, including the abbreviations and how these concepts are connected: RAG, LLMs, AI, AGI, Neural Nets, Model Training, Inference, NVIDIA Blackwell, CPUs vs. GPUs vs. NPUs, AI Agents, LMMs, Large Action Models, Weights, RLHF, Small Language Models, Edge Computing and Edge AI, MMLU, Long Term Memory, Chinchilla, etc.

Chapter 16: Think by Writing (without AI)

This article started with the moment I breathlessly jotted down the first key ideas in a Gmail draft, while doing my morning jog. Weeks later, and voilà! I could have probably gone from thought to finished piece in a matter of minutes or hours instead of weeks by using ChatGPT or any one of the generative AI tools in the market. However, even as a tech optimist with some skin in the AI game, I am reluctant to trust “AI” in my most intimate moments of creative labor (especially writing). I have tried, but NOPE, not yet, too soon. Because writing is a critical part of the thinking and learning process, I wanted to crystallize (for myself first) some of my learnings by writing a summary of my top takeaways and thoughts on AI in this moment. I cannot think as clearly and confidently if I outsource the writing. I love thinking; I love writing — perhaps because it’s the only moment I get to feel like I can overcome my English-as-an-nth-language limitations. Anyway, I digress. The point is: I wrote this to reflect and improve on my understanding of this AI moment, and I recommend that you do the same.

Chapter 17: Take a Step Back, Don’t Burn Out

This is an exciting time to be alive, with or without AI. But please, prioritize your humanity. Humanity and nature have inspired so much progress, so don’t ruin it. Take a step back and consider doing or saying nothing for a set period of time — don’t burn out. After years of avoiding burnout, it finally caught up to me in 2023. I learned 3 things:

No one is coming to save you, no one cares, you are responsible for yourself.

It’s okay to not pursue outward displays of excellence and progress and to focus on internal foundational stuff, like your intellectual, emotional, spiritual, and physical health.

Be ruthless, especially a ruthless advocate of yourself to yourself first (by ruthlessness I mean a stubbornness against mediocrity backed by intellectual rigor and emotional humility).

And whatever you do, don’t be passive, dull, or pessimistic. If you feel burned out, overwhelmed, or numb from the shocks of reality, this childlike book for adults helped me take a step back and reflect: The Boy, the Mole, the Fox, and the Horse.

Appendix 1: Footnotes

The Transformer Revolution: How it’s Changing the Game for AI

Training great LLMs entirely from ground up as a startup by Yi Tay

Jevons Paradox of Increasing Costs by Marc Andreessen and Ben Horowitz

Relax! These scenarios have a ton of holes in them and are not perfect.

AI infrastructure explained by Red Hat

Navigating the High Cost of AI Compute by Andreessen Horowitz

Explaining llm.c in layman terms by Andrej Karpathy

Improving factuality by Tian et al.

Experience is a result of a product embodying the values and culture of an organization, creating a flywheel effect of mass adoption, religious-like brand devotion, and business growth.

Are These Tech Companies Complicit In Human Rights Abuses Of Child Cobalt Miners In Congo?

Appendix 2: Disclaimer

Those who know me deeply know that I hold no favorites, hold nothing too religiously, and am always open to expanding my perspectives and changing my mind about most things. With that, here are some things to keep in mind:

I do not know what I do not know, and I am sure in the near future I will cringe at some of what I thought and wrote. After all, a lot of the knowledge and insights I ingested or generated in 2023 will be replaced by new and better knowledge.

Although this is written by a UX practitioner and indirectly for other UX practitioners, expect a broader-than-UX perspective. After all, the most important things to know about this AI moment have very little to do with mainstream UX. That’s an essay for another decade.

Have fun, learn, experiment. Try and fail, let yourself sound stupid and be criticized. Nothing is that serious in the grand scheme — neither AI, nor whatever else we tend to value over simply having fun (with people we like and love).

Appendix 3: Things that Didn’t Make it to the Essay

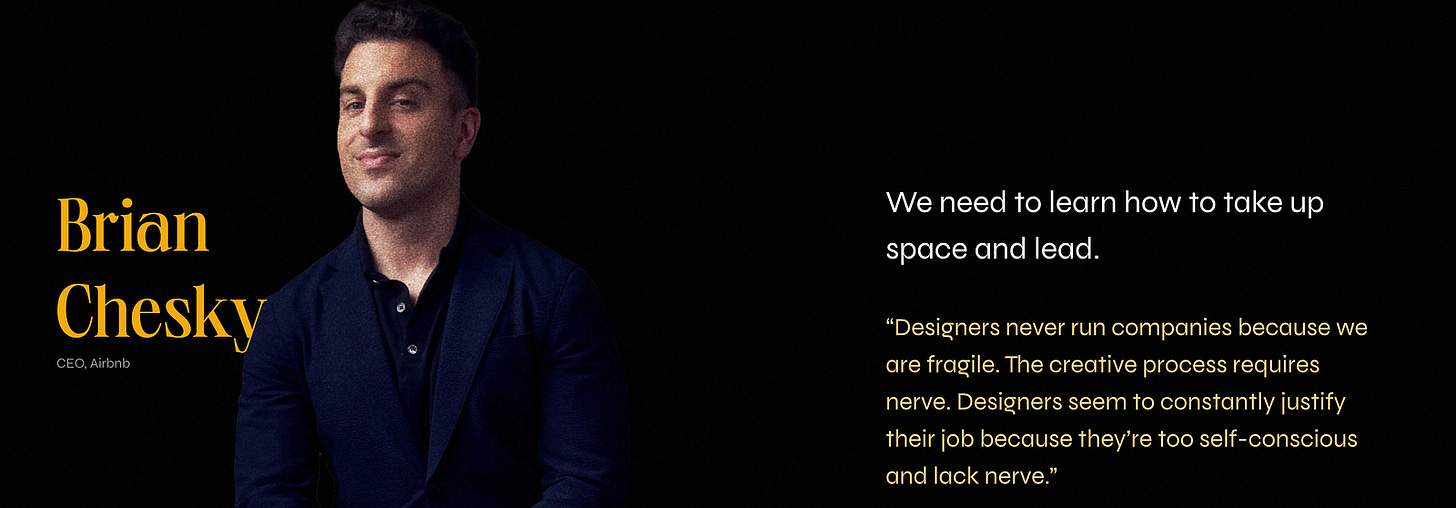

Discuss the many recent topics and articles on Design Leadership struggles, including the Phase Shift episode of “Finding Our Way” podcast. And connect it back to the observations I am making in this AI era.

Discuss Sam Altman’s video about Oklo, and his quote: “The two most important inputs to a really great future are abundant intelligence and abundant energy.”

Discuss the social and cognitive implications of LLMs, including the possible atrophy of our abilities and social skills, based on Noam Chomsky’s thoughts on AI (The False Promise of ChatGPT) and his theories of language (such as language being good for thought).

Add a section on superalignment and the struggle to prepare for AGI, so that AI remains at the service of humans. Use that section to talk about safety and challenges in general. Add the recent news about Ilya Sutskever and Jan Leike: “we are out, we are concerned about AI safety priorities, and the focus on shiny new products.”

In the “little guys” vs. “big guys” topic: talk about product strategy and how these core models are also disrupting thin business models. When the core model begins to do a lot of what you are building your entire company around, you will be in deep trouble. When your product begins too close to where the AI ends (as discussed on a recent episode of the All-In Podcast), you will get steamrolled, as Sam Altman said. The lesson: don’t simply be a ChatGPT wrapper, or you will soon be forced to unwrap!

Talk about how the evolution of human society has been driven by collaboration, and how that collaboration relies on communication. While we often focus on language as the symbolic representation of intent, the vast majority of human communication is in fact non-verbal. We rely on tone, body language, and shared context to convey true meaning. This presents a fundamental gap, not just for AI, but for technology in general. Our digital tools are largely ill-equipped to capture, interpret, or transmit this rich non-verbal data. We are forcing complex human interaction through a filter that strips it of its most essential context. And with LLMs and other tech, this problem is not going away, and is perhaps getting worse. We need to ask: what becomes of a society when the non-verbal aspects of communication are consistently lost? What would that society look like? This is precisely the space designers should be actively exploring and shaping right now.